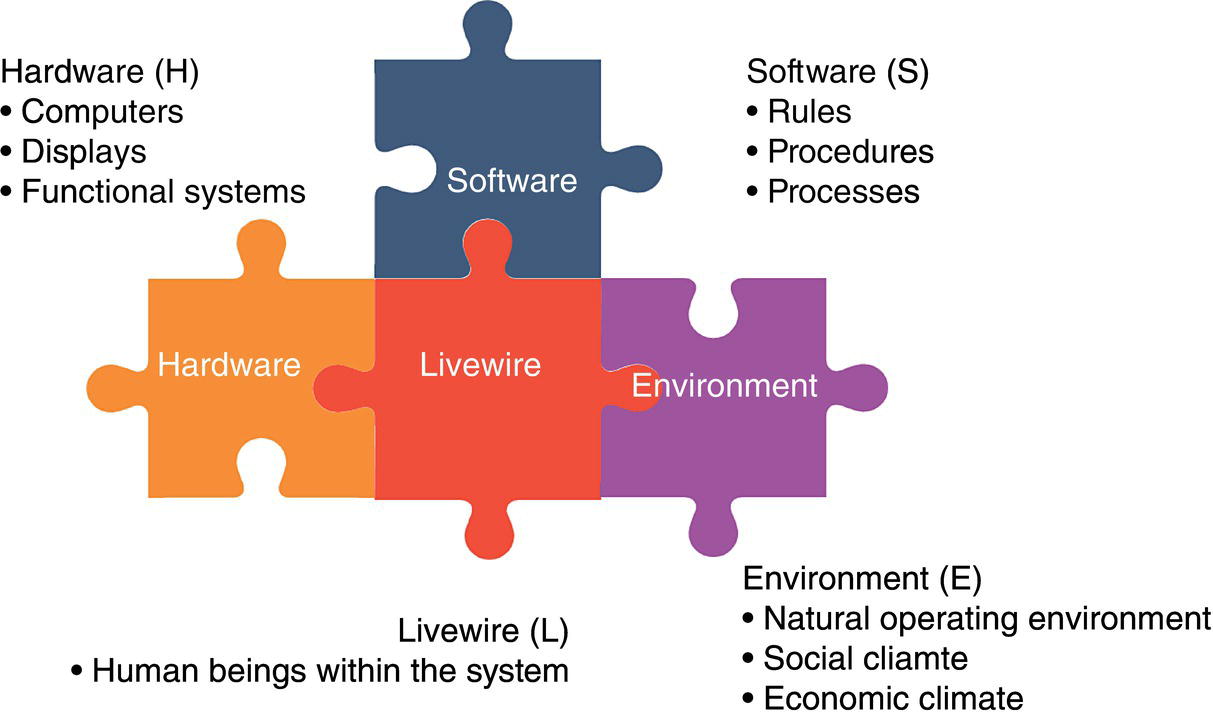

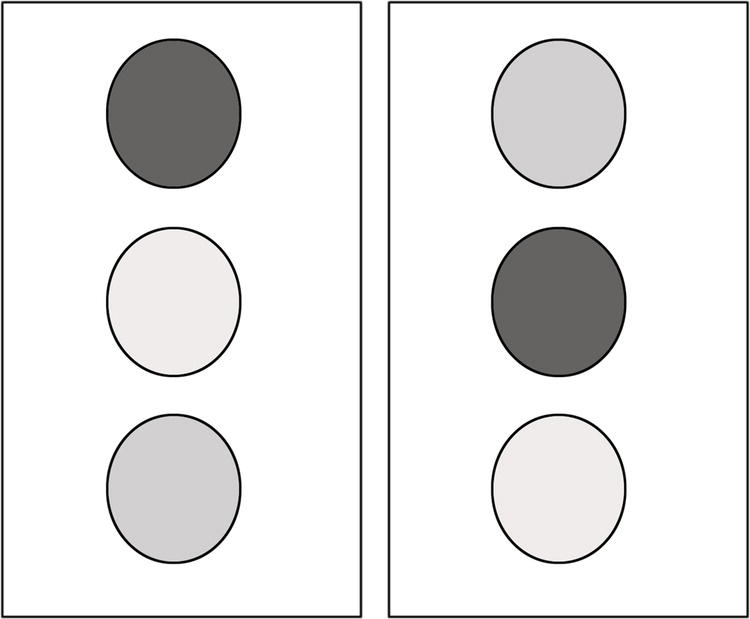

Lorna Harron
Harron Risk Management Ltd., Edmonton, Alberta, Canada

The human component of the activities being performed is as important as the technical components of a design, construction, operation, and maintenance program. According to the International Labor Organization Encyclopedia of Occupational Health and Safety [1], a study conducted in Australia in the 1980s found that at least 90% of all work-related fatalities over a 3-year period involved a behavioral factor. When an incident is investigated, human factors are often lumped into the category of “human error” as either the immediate cause or a contributory cause. This acknowledges the human as a contributor to an undesirable event, but it does not provide enough detail to reduce the potential for the human error to recur. When as many as 90% of incidents can be related to a human factor, it is important to understand human factors and how to reduce the potential for human error in our business. This chapter is designed to give the reader a better understanding of human factors and of how to manage the potential for human error in his/her organization.

“Human factors” is the study of factors and tools that facilitate enhanced performance, increased safety, and/or increased user satisfaction [2]. These three end goals are attained through equipment design changes, task design, environmental design, and training. Human factors is therefore goal oriented, focused on achieving a result rather than on following a specific process. When studying human factors, the end product is a system design that accounts for the psychological and physical properties of the human element. Human factors centers the design process on the user, seeking a system design that supports the user’s needs rather than making the user fit the system. Other technical fields, such as cognitive engineering and ergonomics, interact with or are related to human factors.
Cognitive engineering focuses on cognition (e.g., attention, memory, and decision making), that is, on how a human or a machine thinks about, or gains knowledge of, a system. Ergonomics, also related to human factors, focuses on the interaction of the human body with a workspace or task.

It is well understood in industry that improvements made at the design stage of a project cost significantly less than improvements made after construction, so managing human factors at the design stage provides the greatest value (Table 14.1). Designing for the human, known as user-centered design, creates systems with the human in mind: rather than fitting the human into a system, the system is designed around how the human will use it. When considering the implementation of a new technology and how a human will use it, human–system integration (HSI) is a key component of user-centered design. HSI is a system design approach intended to ensure that human characteristics and limitations are considered, with an emphasis on selection of the technology, training, how the human will use the system in operation, and safety [3].

One methodology for defining the system, so that the interactions between the human and the system can be identified, is the SHEL model (Figure 14.1). This human factors framework divides human factors issues into four components: software, hardware, environment, and liveware (people) [4]. Software refers to the processes and procedures used in the system. Hardware refers to the physical equipment, such as controls, displays, and computers, used within the system. Environment refers to the natural, social, and economic environment in which the system is used, including culture. Liveware refers to the person(s) who work and interact within the system. In using the SHEL model, the interactions among the four components are considered, with a focus on those that affect human performance within the system.
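To make the SHEL review concrete, a design team can track which human-centered interfaces it has examined. This is a minimal Python sketch, assuming the review covers the interfaces between liveware and each component (L-S, L-H, L-E, and L-L, the last being person-to-person interaction), as the model is commonly presented in ICAO guidance. The function names and the example review are hypothetical, not part of the model itself.

```python
from enum import Enum


class Component(Enum):
    """The four SHEL components as described in the text."""
    SOFTWARE = "S"     # processes and procedures
    HARDWARE = "H"     # controls, displays, computers
    ENVIRONMENT = "E"  # natural, social, and economic environment, including culture
    LIVEWARE = "L"     # the person(s) working within the system


def liveware_interfaces() -> list[str]:
    """List the human-centered interfaces: liveware paired with each component."""
    return [f"L-{component.value}" for component in Component]


def unreviewed_interfaces(reviewed: set[str]) -> list[str]:
    """Return the human-centered interfaces a design review has not yet covered."""
    return [name for name in liveware_interfaces() if name not in reviewed]


# Hypothetical review of a new control-room display: procedures and hardware checked so far.
print(unreviewed_interfaces({"L-S", "L-H"}))  # ['L-E', 'L-L']
```

Keeping liveware at the center of every pair reflects the model's emphasis on interactions that affect human performance rather than, say, hardware-to-software compatibility.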
Table 14.1 Benefits of Incorporating Human Factors at the Design Stage

In the pipeline industry, human factors can create the potential for human error at many points along the life cycle of a pipeline. At the Banff/2013 Pipeline Workshop, a tutorial on human factors used a life cycle analysis to identify where the potential for human error exists; Figure 14.2 illustrates the results of this discussion. A life cycle approach gives an organization a disciplined way to integrate human factors into its programs, standards, procedures, and processes. To use the human factors life cycle, an organization may wish to assess itself, for each area identified, against the presence of programs, standards, procedures, and processes that manage human error potential.

Examining the potential for human error with a life cycle approach also highlights the interrelationship between human factors management and management systems. Common management system elements, such as change management and documentation, are reflected in each stage of the human factors life cycle. Opportunities exist to reduce the potential for human error in design, construction, operation, maintenance, and decommissioning through robust quality control processes, such as self-assessments. Human factors assessment techniques such as job hazard analysis, job task analysis, human factors failure modes and effects analysis, and workload analysis can help operators understand their current state and the gaps between it and a desired state. Specialists in human factors can be engaged to assess and mitigate any or all of the areas identified in the life cycle in Figure 14.2. The following case study illustrates this approach.
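The self-assessment described above can be sketched as a simple gap check. This Python example is a hypothetical illustration, not a method from the workshop: the stage names come from the text, but the areas in Figure 14.2 are not reproduced here, and the function name `find_gaps`, the control-type labels, and the example inventory are assumptions. It also simplifies each control to "present" or "absent", whereas a real self-assessment would judge adequacy as well.

```python
# Stage names follow the text; control types and the inventory below are hypothetical.
LIFE_CYCLE_STAGES = ["design", "construction", "operation", "maintenance", "decommissioning"]
CONTROL_TYPES = ["program", "standard", "procedure", "process"]


def find_gaps(inventory: dict[str, set[str]]) -> dict[str, list[str]]:
    """For each life cycle stage, list the control types that are missing.

    inventory maps a stage name to the control types already in place there to
    manage human error potential; stages absent from the inventory have none.
    """
    gaps: dict[str, list[str]] = {}
    for stage in LIFE_CYCLE_STAGES:
        present = inventory.get(stage, set())
        missing = [control for control in CONTROL_TYPES if control not in present]
        if missing:
            gaps[stage] = missing
    return gaps


# Hypothetical self-assessment results.
current_state = {
    "design": {"program", "standard", "procedure", "process"},
    "operation": {"procedure", "process"},
    "maintenance": {"procedure"},
}
for stage, missing in find_gaps(current_state).items():
    print(f"{stage}: missing {', '.join(missing)}")
```

A stage with no recorded controls shows every control type as missing, which flags it for the kind of multiyear review described in the case study.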
XYZ Pipeline Company has a pipeline integrity program that includes threat mitigation for corrosion, cracking, deformation and strain, third-party damage, and incorrect operations. The company has decided to perform a gap analysis of its integration of human factors using the life cycle approach. In the “maintain” category of the life cycle, the company is confident that it uses the best available technology to monitor and assess the integrity condition of its pipelines. It uses Vendor A, a leader in inspection technology in the industry, to perform pipeline inspections and to provide detailed analyses of the inspection results.

Using the life cycle approach, XYZ Pipeline Company evaluates how it has incorporated human factors into the identified areas. First, it asks whether there is a potential for human error in the determination of inspection frequency. The process used to determine inspection frequency includes a review by at least three senior engineering professionals, and the company concludes that the existing process minimizes the potential for an error at this point. The next question is whether there is potential for human error in the selection of the inspection tool used to evaluate the threat. The existing process relies on the expertise and experience of the company’s integrity management engineers. Although these individuals currently have more than 20 years of industry experience, some are preparing to retire in the next 2–5 years. This risk is highlighted as a potential gap, and action planning identifies the need to create a guideline for tool selection. During the discussion of this topic, the company also recognizes that inspection technology is changing rapidly and that understanding tool capability requires constant monitoring of the inspection market.
Another action is therefore identified: a work task for a senior engineer to monitor the inspection market at least annually so that the guideline remains current. Next, the company examines the potential for an error in the inspection reports on which it bases integrity decisions. This discussion highlights several potential gaps: a lack of quality control on the vendor reports received by XYZ Pipeline Company; a lack of understanding of the experience and expertise of the people performing the defect analysis within the inspection company; a lack of expertise within XYZ Pipeline Company in reading and understanding defect signals for all the inspection tools; and a lack of processes covering the period between the tool inspection and the preparation of the mitigation plan. Action planning for these gaps calls for a small task force focused on mitigating actions. At this point, XYZ Pipeline Company decides that this is as far as it can progress in a single year, so it develops a multiyear assessment protocol to review the integrity management program over a 5-year term and assure the integration and management of human factors throughout the life cycle of its pipelines.

Figure 14.1 SHEL model. (Adapted from ICAO Doc 9859 Safety Management Manual (2012).)

Figure 14.2 Human factors life cycle, Banff/2013 pipeline workshop.

Human factors affect a pipeline system when a nonoptimal decision has been made. Many factors influence how we process information and, in turn, how we make decisions. Decision making involves three steps: receipt of information, processing of information, and deciding on a course of action (the decision). Receipt of information focuses on information cues, while processing of information focuses on memory and cognition [2]. Deciding on a course of action involves heuristics (simplifying decision-making tools) and biases.
Information is received as cues. These cues may be visual, such as printed words in a procedures document, or auditory, such as speech or alarms. Higher-quality cues increase the probability that the information is received into awareness and eventually acted upon. Cues can be enhanced through targeting, which involves making a cue conspicuous and highly visible while maintaining the norms for that cue. For example, if the cue for an operator to perform an action is a light turning on, a few simple steps taken from a human factors perspective can ensure that the appropriate action is clear to the operator. First, the action the cue is supposed to elicit must be identified. If the light indicates an upset condition and the resulting action is to turn off a device, the color the operator would normally expect is red. If the light indicates a desired state and the action is to start up a piece of equipment, the color the operator would normally expect is green. If the colors used are not consistent with the operator’s expectations, the likelihood of a human error, such as an incorrect reaction to the cue, is heightened.

This anticipation of what we will see is called expectancy. A familiar example is what we expect to see at a traffic light. The traffic light on the left-hand side of Figure 14.3 meets our expectation of what a traffic light should look like; that is, it meets our norms. The traffic light on the right-hand side of the figure does not meet our expectations and norms and would therefore be likely to contribute to a human error and a subsequent traffic accident.

Figure 14.3 Expectancy.

The way we process information is affected by our memory systems. Prior knowledge is stored in long-term memory, and information is manipulated in working memory. Working memory is like a shelf of products that are available for only a short period of time and that require a lot of effort to keep there.
Information processing depends on cognitive resources: a pool of attention or mental effort, available for a short period of time, that can be allocated to processes such as rehearsing, planning, understanding, visualizing, decision making, and problem solving. How much effort we put into working memory depends on the value we place on the information. If we put effort into only a few areas, the result is “selective attention.” Effort may also be influenced by fatigue, in which case the effort to perform a task is perceived to be greater than its anticipated value.

Working memory is limited by its transitory nature and by the limited amount of cognitive resources. It can hold 7 ± 2 chunks of information [3], where a chunk may be a group of items such as a phone number, an area code, or the PIN for your computer. Working memory is also limited by time: its contents decay as time passes. Another limitation is confusion between similar pieces or chunks of information, which causes erroneous information to be processed. In an operating environment, the amount of activity can affect the ability to process information in working memory effectively. Some tips for managing working memory are listed in Table 14.2.

Long-term memory stores information for retrieval at a later time. When we learn something, we move information into long-term memory for storage. Training is specific instruction or procedures designed to facilitate learning. Long-term memory is distinguished by whether it involves memory for general knowledge (semantic memory) or memory for specific events (event memory) [4]. Material in long-term memory has two important features that determine how easily it can later be retrieved: its strength and its associations. Strength is determined by the frequency and recency of its use.
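One published way to express how frequency and recency combine into memory strength is the base-level learning equation of the ACT-R cognitive architecture, B = ln(sum over j of t_j^(-d)), where t_j is the time since the j-th use of an item and d is a decay parameter (0.5 is the conventional default). The Python sketch below borrows that equation purely as an illustration; it is not a model used in this chapter, and the function name and the example usage histories are hypothetical. Time units are arbitrary here, because changing units shifts every activation by the same constant and leaves comparisons unchanged.

```python
import math


def base_level_activation(times_since_use: list[float], decay: float = 0.5) -> float:
    """ACT-R base-level learning: B = ln(sum of t_j ** -decay over past uses j).

    Each t_j is the time elapsed since one past use of the item. More uses
    (frequency) add terms to the sum; shorter elapsed times (recency) make
    each term larger. Higher B means the item is easier to retrieve.
    """
    if not times_since_use or any(t <= 0 for t in times_since_use):
        raise ValueError("need at least one positive elapsed time")
    return math.log(sum(t ** -decay for t in times_since_use))


# Hypothetical PINs: one used on each of the last five days, one used once 30 days ago.
frequent_recent = base_level_activation([1, 2, 3, 4, 5])
rare_old = base_level_activation([30])
print(f"{frequent_recent:.2f} vs {rare_old:.2f}")  # 1.17 vs -1.70
```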
For example, a PIN or password used frequently will have strength, and if it is associated with something relevant to the individual, it will also have an association and will therefore be easier to recall. Associations occur when items stored in long-term memory are linked to other items; if associations are not repeated, their strength weakens over time. Tips for managing long-term memory are listed in Table 14.3.

Table 14.2 Tips to Manage Working Memory

Metaknowledge, or metacognition, refers to people’s knowledge or understanding of their own knowledge and abilities. It affects people’s choices: the actions they choose to perform, the information or knowledge they choose to communicate, and whether they decide that additional information is required to make a decision. Knowledge can be based on perceptual information and/or memory. Perceptual information may be more accurate, but it may require greater effort to use than information stored in memory.

According to resource theory [5], we make decisions under limited time with limited information. Our scarce mental resources are shared among various tasks, and more difficult tasks require greater resources. Performance on some concurrent tasks may therefore decline (see Table 14.4).

Table 14.3 Tips to Manage Long-term Memory

Table 14.4 Ways to Address a Multitasking Environment

Information processing is influenced by stressors that lie outside the information itself. These stressors may be physical, environmental, or psychological, such as life stressors and work or time stressors [2]. Examples of common stressors include noise, vibration, heat, lighting, anxiety, and fatigue. The effects of stressors may be physical and/or psychological. Some stressors produce a physiological reaction, such as raised heart rate, nausea, or headache, while others may produce a psychological response, such as mood swings.
The duration and intensity of a stressor may lead to long-term negative effects on the health of the individual. In most cases, stressors affect information receipt and processing.

Work overload occurs when there are more decisions to be made than there are cognitive resources available to make optimal decisions. When overloaded, the decision maker becomes more selective in the receipt of cues. Limiting the inputs received through this selective attention filtering leaves the decision maker more cognitive resources for the task at hand: making a decision. Work overload may also lead the decision maker to weigh incoming information and screen some of it out, limiting the amount of information processed. If the screening is performed accurately, this makes better use of the available cognitive resources. However, filtering and screening tend to result in choosing the “easiest” decision, which can reduce the accuracy of the decision-making process and potentially lead to cognitive tunneling, or fixation on a specific course of action [6]. Work overload can manifest as fatigue or sleep disruption.

Stress can occur because we have too many things to do in too short a time. Managing work overload as a stressor requires an evaluation of current workload [7]. To determine workload, consider which tasks need to be accomplished, when these tasks need to be performed, and how long typical tasks take. The time to perform a task must include cognitive tasks such as planning, evaluating alternatives, and monitoring success (Table 14.5). The impact of workload is difficult to quantify. Will each individual perform a task in exactly the same way? Will individuals process information and make decisions at the same speed? Are there other distractions or overlapping information that would affect the task and the speed with which it is accomplished?
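The three workload questions above (which tasks, when, and how long, including cognitive time) can be tallied in a rough sketch. This Python example is hypothetical: the `Task` fields, the one-hour time buckets, the 80% utilization limit, and the task list are assumptions for illustration. As noted above, a single estimate like this cannot capture differences between individuals or the effect of distractions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    hour: int             # hour of the shift in which the task must be performed
    execution_min: float  # time to carry out the task itself
    cognitive_min: float  # planning, evaluating alternatives, monitoring success


def hourly_utilization(tasks: list[Task]) -> dict[int, float]:
    """Fraction of each hour's 60 minutes demanded by the tasks due in that hour."""
    demand: dict[int, float] = defaultdict(float)
    for task in tasks:
        demand[task.hour] += task.execution_min + task.cognitive_min
    return {hour: minutes / 60.0 for hour, minutes in sorted(demand.items())}


def overloaded_hours(tasks: list[Task], limit: float = 0.8) -> list[int]:
    """Hours whose demanded time exceeds the assumed utilization limit."""
    return [hour for hour, share in hourly_utilization(tasks).items() if share > limit]


# Hypothetical control-room shift.
shift = [
    Task("line pack review", hour=1, execution_min=20, cognitive_min=10),
    Task("shift handover", hour=1, execution_min=15, cognitive_min=10),
    Task("pump station start-up", hour=2, execution_min=10, cognitive_min=5),
]
print(hourly_utilization(shift))  # hour 1 is about 0.92, hour 2 is 0.25
print(overloaded_hours(shift))    # [1]
```

Counting only execution time would put hour 1 at about 58% utilization; adding the planning and monitoring time pushes it past the assumed limit, which is why the cognitive portion of each task belongs in the estimate.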
Table 14.5 Tips to Manage Workload Overload

Fatigue is the state between being alert and being asleep. It may be mental, physical, or both. In the pipeline industry, it is commonly understood that long shifts, in particular night shifts, may result in fatigue [8]. When fatigue occurs, performance is affected. Managing fatigue includes scheduling rest breaks and enforcing a maximum number of hours worked in one day (Table 14.6).

Sleep disruption is a major contributor to fatigue, and it is influenced by the amount of time you sleep each night. Sleep deprivation occurs when an individual gets less than the 7–9 h of sleep per night that adults typically need. For shift workers, sleep disruption occurs when tasks are performed at the low point of the natural circadian rhythms, which falls in the early hours of the morning. Circadian rhythms are the body’s natural cycles, regulated by light and darkness, that affect physiological functions such as body temperature, sleeping, and waking [9]. For those who travel extensively, jet lag may also disrupt circadian rhythms. Understanding the impact of sleep disruption is important when evaluating the potential for a human error to occur. Tasks requiring input from visual cues are affected by sleep disruption, and vigilance tasks, common in control center operations, may suffer from inattention and poor decision making as a result.

Table 14.6 Tips to Manage Fatigue

Vigilance is defined as the detection of rare, near-threshold signals in noise. In a control room, the rare, near-threshold signal may be a small fluctuation in a process variable that precedes a larger change picked up by the alarm system. Flying an airplane is considered a vigilance task: takeoff and landing are the two main active tasks, and for the rest of the flight the pilot monitors the equipment gauges. This monitoring requires some mental effort, but action is required only when a nonstandard condition arises.
Similar to this aviation example, a vigilance task in the pipeline industry may be monitoring a control panel in a control center. A concern with vigilance tasks is that the number of missed signals increases with the length of the vigilance task, an effect called vigilance decrement. For a control center operator, presenting alarms or other cues on a regular basis, rather than allowing long periods of time between cues, would reduce this concern. This and other tips for managing vigilance are listed in Table 14.7.

Lighting and noise are two environmental stressors that can be remedied in a work environment (Table 14.8). Performance can be degraded when the amount of light and/or noise is too high or too low [2]. Continued high exposure (e.g., to noise) or low exposure (e.g., to light) may also result in health issues. These effects may not become obvious until some point in the future, so correlating them with the stressor may be difficult.

Table 14.7 Tips to Manage Vigilance

Table 14.8 Tips to Manage Lighting and Noise

Readers who wish to apply the above tips can refer to the following guidance documents:

Vibration is an environmental stressor that can lead to both performance issues and health issues (e.g., repetitive motion disorders and motion sickness) [10]. High-frequency vibration can affect either a specific part of the body or the whole body. Tasks that involve whole-body vibration include off-road driving and working around large compressors or pumps. Effects of whole-body vibration include stress, risk of injury [10], difficulty using devices such as touchscreens or handheld devices, difficulty with eye–hand coordination, and blurred vision. At lower vibration frequencies, vibration can cause motion sickness, which in turn degrades performance through inattention. Tips for managing vibration are listed in Table 14.9.

Temperature affects performance and health when it is either too low or too high [11].
Both excessive heat and excessive cold can produce performance degradation and health problems. Health issues can include physical reactions to excessively high or low temperatures, such as heatstroke and frostbite. The performance impact can be cognitive, affecting how an individual processes information. Some tips for managing temperature-related problems are listed in Table 14.10.

Table 14.9 Tips to Manage Vibration

Table 14.10 Tips to Manage Temperature

Air quality issues can negatively affect the performance and health of an individual. Air quality concerns are not limited to internal spaces, such as an office, but extend to external spaces, such as a facility. Poor air quality can result from inadequate ventilation, pollution, smog, or the presence of carbon monoxide, and it can impair cue recognition, task performance, and decision-making capability [12].

Psychological stress can be triggered by a perception of potential harm or of potential loss of something the individual values. It is extremely difficult to design for this type of stressor, since it is related to perception, and perception varies among individuals. The natural question is “What affects an individual’s perception of harm or loss?” The way a person interprets a situation affects the stress level he or she feels, and cue recognition and information processing are key elements of this interpretation. Psychological stress is a real phenomenon that is hard to express and manage (Table 14.11). Psychological stressors may cause physiological reactions, such as elevated heart rate. Up to an optimal level, psychological stress can improve performance. When this optimal level is exceeded, however, the result can be elevated feelings of anxiety or danger, or “overarousal.” Information processing in a state of overarousal can be altered, affecting memory and decision-making capability.

Life itself may be stressful outside the work environment.
Life events, such as a health concern with a loved one, can affect work performance and potentially cause an incident. When an incident related to human factors occurs, it may be classified as “inattention.” The cause of the inattention is likely the diversion of attention away from work tasks and toward the source of life stress. Some tips for managing life stress are listed in Table 14.12.

Table 14.11 Tips to Manage Psychological Stress

Table 14.12 Tips to Manage Life Stress

Guidelines for the management of working memory are available in other industries, including aviation, medicine, and road transportation. The following are some examples of references for the reader to explore and apply.

Decision making is a task that involves selecting one option from a set of alternatives, with some knowledge of, and some uncertainty about, the options. When we make decisions, we plan and select a course of action (one of the alternatives) based on the costs and values of different expected outcomes. The literature contains various decision-making models, including rational or normative models, such as utility theory and expected value theory, and descriptive models [13, 14]. If good information and ample time are available, a careful analysis using rational or normative models is possible. If the information is complex and time is limited, simplifying heuristics are often employed. This section focuses on descriptive models such as heuristics and biases.

When decisions are made under limited time and information, we rely on less complex means of selecting among choices [15, 16]. These shortcuts, or rules of thumb, are called heuristics. Choices made using heuristics are often deemed “good enough.” Because heuristics simplify information receipt and processing, they can lead to flaws or errors in the decision-making process.
Despite this potential for error, heuristics lead to decisions that are accurate most of the time, largely because of the experience and resources people have developed over time. Cognitive biases result when an inference distorts a judgment, and they can arise from the use of heuristics in decision making. This section explores the following heuristics and biases: satisficing, cue primacy and anchoring, selective attention, availability, representativeness, cognitive tunneling, confirmation bias, and framing bias.

As its name suggests, the satisficing heuristic relates to a decision that satisfies the needs of the user. The decision maker stops the inquiry process when an acceptable choice has been found, rather than continuing until an optimal choice has been found [17]. This heuristic can be very effective, as it requires less time. Because it does not provide an optimal solution, however, it can lead to biases and poor decisions. Satisficing can be advantageous when there are several options for achieving a goal and a single optimal solution is not required, such as choosing an example data set to display in a presentation. It could lead to a less than optimal result when the decision requires an extensive review to determine a single solution, such as choosing a method for defect growth prediction.

In cue primacy and anchoring, the first few cues received are given greater weight or importance than information received later [15]. This leads the decision maker to favor a hypothesis supported by information received early. This heuristic could lead to decision errors when the inquiry process calls for extensive or in-depth analysis. For example, cue primacy and anchoring would not be appropriate for decision making during a root cause investigation.

Selective attention occurs when the decision maker focuses attention on specific cues when making a decision [2].
A decision maker has limited resources to process information, so we naturally “filter out” information and focus attention on certain cues. If an operator has many alarms occurring at once, the few cues or alarms selected for attention influence the action taken. Selective attention is affected by four factors: salience (prominence or conspicuousness), effort, expectancy, and value. Cues that are more prominent, such as cues at the top of a display screen, will receive attention when resources are limited and will become the information used to make a decision. Selective attention is an advantage in a well-designed environment where many potential cues are present. However, it can lead to poor decision making if the important cues are not the most salient ones in the environment.

The meaning of the availability heuristic is reflected in its name. Decision makers generally retrieve information or decision-making hypotheses that have been considered recently or frequently [18]. This information comes foremost to mind, and decisions can be based on the ease and speed with which it is recalled. For example, if a pipeline designer who has recently completed a design for a pipe in rocky conditions is then asked what considerations should be included in the design of a different pipeline, the designer will readily recall soil conditions and comment that they should be considered for the new project. This heuristic is of great benefit when brainstorming options to consider, but it can be limiting when a full analysis of all options is required.

Once again, the meaning of the representativeness heuristic is as its name implies. Decision makers focus on cues that are similar to something that is already known [16].
The propensity is to make the same decision that was successful in a previous situation, since the two sets of cues “look the same.” For example, when an analyst is evaluating in-line inspection (ILI) data, he/she may see two signals that look very similar and, due to the representativeness heuristic, use the same characterization for both features. When the two signals are identical, this heuristic provides a fast and effective means of decision making, or in this case of characterizing features. If, however, the signals are similar but not identical, the representativeness heuristic may lead to a decision-making error.

Cognitive tunneling may be colloquially known as “tunnel vision.” Once a hypothesis has been generated or a decision chosen for execution, subsequent information is ignored [19]. In decision making, cognitive tunneling can easily lead to a decision error. To avoid it, the decision maker should actively search for contradictory information before making a decision. For example, asking for a “devil’s advocate” opinion is one means of testing a hypothesis before finalizing a decision.

In confirmation bias, the decision maker seeks out information that confirms a hypothesis or decision [20]. As with cognitive tunneling, focusing only on confirming evidence, and ignoring other evidence that could lead to an optimal decision, can lead to a decision error. When we rely on memory, a tendency to forget contradictory information, or to underweight it in favor of confirming evidence, impairs our ability to diagnose a situation effectively, prepare a hypothesis, and subsequently make an optimal decision. The main difference between cognitive tunneling and confirmation bias is that cognitive tunneling ignores all information once a hypothesis or decision has been chosen. With confirmation bias, however, information is filtered so that only information confirming the chosen hypothesis or decision gets through.
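The distinction between cognitive tunneling and confirmation bias can be made concrete with a toy filter over incoming evidence. This Python sketch is illustrative only: the function `weighed_evidence`, the three mode names, and the leak-investigation evidence list are hypothetical, and real biases are neither this clean nor this deterministic. It models only evidence that arrives after the hypothesis is chosen, and each item is tagged with whether it supports that hypothesis.

```python
from typing import List, Tuple

# (description, supports the committed hypothesis?)
Evidence = Tuple[str, bool]


def weighed_evidence(evidence: List[Evidence], committed_after: int, mode: str) -> List[str]:
    """Return the evidence a decision maker actually weighs (toy model).

    committed_after: how many items arrived before the hypothesis was chosen.
    mode: "balanced" (all evidence), "tunneling" (nothing after commitment),
          or "confirmation" (only confirming items after commitment).
    """
    before, after = evidence[:committed_after], evidence[committed_after:]
    if mode == "balanced":
        kept = evidence
    elif mode == "tunneling":
        kept = before  # all later information is ignored
    elif mode == "confirmation":
        kept = before + [item for item in after if item[1]]  # only confirming items pass
    else:
        raise ValueError(f"unknown mode: {mode}")
    return [description for description, _ in kept]


# Hypothetical evidence about a suspected leak; the hypothesis is chosen after two items.
log = [
    ("pressure drop at upstream station", True),
    ("flow imbalance", True),
    ("meter recalibrated this morning", False),
    ("downstream pressure steady", False),
    ("imbalance alarm repeats", True),
]
for mode in ("balanced", "tunneling", "confirmation"):
    print(mode, len(weighed_evidence(log, committed_after=2, mode=mode)))
# balanced 5, tunneling 2, confirmation 3
```

In both biased modes the two contradictory items never reach the decision; the difference is that confirmation bias still admits the repeated alarm because it agrees with the leak hypothesis.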
While many biases operate at a subconscious level, framing bias can be used deliberately to influence others. Framing bias occurs when the way material is presented influences the decision another person makes [21]. For example, when writing a procedure that requires an activity to be performed under specific circumstances, the writer imposes a framing bias by stating that the activity is not done unless certain criteria are met, instead of stating that the activity is done when those criteria are met. The wording influences the decision maker to decide that the activity is not performed, rather than performed.

Table 14.13 Factors that Influence Decision Making

Some factors that influence decision making are listed in Table 14.13. The most effective means of correcting poor decisions is feedback that is clearly understood and includes some diagnostics. In many organizations, the largest contributor to incidents involves motor vehicles. When driving, it is difficult to learn from previous behavior and make better decisions because little feedback is received while performing the task. When operating a pipeline, the operator receives feedback through alarms that indicate whether a decision was effective. Alarm feedback may not lead to an optimal decision; however, the use of simulators to provide feedback enhances decision making.

As we have described, there are many ways in which decision making can be degraded, increasing the potential for human error. The process of making a decision is influenced by design, training, and the presence of decision aids [2]. We often jump to the conclusion that poor performance in decision making means that we must do something “to the person” to make him or her a better decision maker.
When the category of “human error” is used during an incident investigation, it tends to result in actions focused on the person who was performing the task when the incident occurred. A more holistic approach considers the person as well as opportunities to improve how a person could make a decision in a similar situation. Such an approach may involve task redesign, the use of decision support systems, and training.
A decision support system is an interactive system designed to improve decision making by extending cognitive decision-making capabilities. Some support systems focus on the individual, using rules or algorithms that require the individual to make a consistent decision. For tasks that result in a pass/fail decision with little variation in the information received, this approach works well, because decision consistency is more important than optimizing decisions in unusual situations [22]. An individual-focused approach can fail when unusual or nonroutine information may be received or when data entry mistakes can occur. Alternatively, decision support systems can provide tools that support decision making. For tasks that require decisions in the presence of unusual or nonroutine information, this type of support system can complement the human in the decision-making process. Examples of decision support tools include decision matrices for a risk management approach to decision making, spreadsheets to reduce cognitive loading, simulations to perform “what-if” analyses, expert systems such as computer programs that reduce error in routine tasks, and display systems to manage visual cues. Some of these tools use automation, so it is important to keep in mind the potential to create vigilance tasks. Balancing the amount of automation to optimize decision making is an art rather than a science.
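An individual-focused, rule-based decision aid of the kind described above can be sketched in a few lines. In this hypothetical example, a reading is compared against a fixed acceptance limit so that every reviewer reaches the same pass/fail decision. Readings outside the input range the rule was designed for (possible nonroutine conditions or data entry mistakes) are not forced into pass/fail but are escalated to a person, which addresses the failure mode noted in the text. The function name, parameters, and limits are illustrative assumptions, not values from any standard or product.

```python
import math
from enum import Enum


class Decision(Enum):
    PASS = "pass"
    FAIL = "fail"
    ESCALATE = "escalate to human review"


def rule_based_check(value, acceptance_limit, valid_min, valid_max):
    """Hypothetical individual-focused decision aid.

    A fixed rule gives every reviewer the same pass/fail answer for routine
    inputs. Inputs outside the range the rule was designed for (nonroutine
    conditions or possible data entry mistakes) are not forced into
    pass/fail; they are handed back to a person.
    """
    # Check for a missing value first so the comparisons below never see None.
    if value is None or math.isnan(value) or not (valid_min <= value <= valid_max):
        return Decision.ESCALATE
    return Decision.PASS if value <= acceptance_limit else Decision.FAIL


if __name__ == "__main__":
    # Illustrative limits only: accept at or below 40; rule designed for 0 to 100.
    for reading in (30.0, 55.0, 550.0):
        print(reading, rule_based_check(reading, 40.0, 0.0, 100.0).value)
```

Here 30.0 passes, 55.0 fails, and 550.0 (for example, a slipped decimal point during data entry) is escalated rather than silently failed. The escalation branch is the design choice that keeps a consistency-oriented rule from handling nonroutine input as if it were routine.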
On July 3, 1988, the USS Vincennes accidentally shot down an Iranian Airbus (Flight 655), resulting in the deaths of 290 people [23, 25]. This occurred in the Strait of Hormuz in the Persian Gulf. The cruiser was equipped with sophisticated radar and electronic battle gear called the AEGIS system. The crew tracked the oncoming plane electronically, warned it to keep away, and, when it did not, fired two Standard surface-to-air missiles. The Vincennes’ combat teams believed the airliner to be an Iranian F-14 fighter jet. No visual contact was made with the aircraft until it was struck and blew up about 6 mi. from the Vincennes.
Although the United States and Iran were not at war, there had been some short but fierce sea battles between the two countries in the Gulf. The year before, on May 17, the USS Stark had nearly been sunk by an Iraqi fighter-bomber, whose missile attack killed 37 U.S. sailors. Recently received U.S. intelligence had predicted a high-profile attack on July 4, the day after this incident occurred. The USS Vincennes and another U.S. vessel were in a gunfight with an Iranian gunboat when the “fighter” appeared on the radar screen. The Airbus had departed from an airstrip that was also used by military aircraft. The Vincennes tried to determine “friend or foe” by interrogating the identification, friend or foe (IFF) electronic boxes carried in military planes. Warnings were sent out on civilian and military channels with no response from the Airbus. The Airbus’s flight path passed over the Vincennes, and during its approach the crew of the Vincennes interpreted the aircraft as descending. The resulting decision was to launch two missiles, destroying the Airbus and killing everyone on board. To understand the human factors at play, it is important to understand the cues that were perceived and how these cues affected the decision-making process.
It was noted that the “friend or foe” interrogation was being made on the military channel because the operator had earlier been challenging an Iranian military aircraft on the ground at Bandar Abbas and had neglected to change the range setting on his equipment. Flight 655 was actually transmitting IFF Mode III, which is the code for a civilian flight. Although warnings were broadcast from the USS Vincennes and received by the Airbus crew, the warning on the civilian frequency was ignored. The Airbus crew was communicating with air traffic control and likely did not suspect that the military channel warning was intended for them. Noise and activity levels also affected the auditory cues: some officers thought the aircraft was commercial, while others thought it was a fighter jet, but only the first information (fighter jet) was “heard.”
Several heuristics affected the decision to launch the two missiles. In reviewing the events that led up to the shootdown, it is easy to conclude that the crew “should have known better,” which is an example of hindsight bias. The presence of information that indicated a potential attack, under stressful environmental and psychological conditions and with limited time for decision making, resulted in the use of heuristics and subsequent decision errors.
Could human factors affect the pipeline industry? They have in the past. The following examples were found in incident reports on the National Transportation Safety Board (NTSB) website (http://www.ntsb.gov/investigations):
The NTSB determined that the probable cause of the rupture and fire was a contractor’s puncturing the unmarked, underground natural gas pipeline with a power auger. Contributing factors were the lack of permanent markers along the pipeline and the failure of the pipeline locator to locate and mark the pipeline before a utility pole was installed in the pipeline right-of-way. From a human factors perspective, the presence of visual cues for decision making is critical.
Unmarked pipelines represent a lack of visual cues, which contributed to this incident.
The NTSB determined that the probable cause of the accident was environmentally assisted cracking under a disbonded polyethylene coating that remained undetected by the integrity management program. Contributing to the accident was the operator’s failure to include the ruptured pipe section in its integrity management program. Contributing to the prolonged gas release was the pipeline controller’s inability to detect the rupture because of SCADA system limitations and the configuration of the pipeline. From a human factors perspective, the controller’s ability to decide whether a leak was present was affected by the visual cues provided by the SCADA system.
The NTSB determined that the probable cause of the pipeline rupture was corrosion fatigue cracks that grew and coalesced from crack and corrosion defects under disbonded polyethylene tape coating, producing a substantial crude oil release that went undetected by the control center for over 17 h. The rupture and prolonged release were made possible by pervasive organizational failures that included the following:
From a human factors perspective, following a human factors life cycle approach can minimize the potential for human error and decision-making flaws. Additionally, the incident highlights the importance of an operator’s ability to make decisions based on visual cues. It also illustrates the need to consider human factors interfaces external to an organization, including the public and emergency responders.
The NTSB determined that the probable cause of the accident was inadequate quality assurance and quality control in 1956 during a line relocation project, which resulted in the installation of a substandard, poorly welded pipe section with a visible seam weld flaw.
Over time, the flaw grew to a critical size, causing the pipeline to rupture during a pressure increase stemming from poorly planned electrical work; an inadequate pipeline integrity management program failed to detect and repair the defective pipe section. Contributing to the severity of the accident were the lack of either automatic shutoff valves or remote control valves on the line, flawed emergency response procedures, and the delay in isolating the rupture to stop the flow of gas. As in the previous example, following a human factors life cycle approach can minimize the potential for human error and decision-making flaws. In addition to integrity management, this incident highlights the ability to make decisions under emergency conditions and the decision-making tools required to make optimized decisions.
Application of human factors in an organization using the life cycle approach may take various forms depending on the size and complexity of the organization. Some common elements to consider in the development of a human factors management plan/program include the following:
There are many tools and techniques available to help an organization integrate human factors into various areas of the business. While not exhaustive, the list below provides examples that can be considered.
In the oil and gas pipeline industry, everything we do requires some level of human intervention, from designing an asset, to turning a valve, to operating the pipeline, to analyzing data related to pipe condition. Understanding, as an organization, where these human interventions occur and ensuring some level of evaluation of the potential for human error in decision making is critical to reducing the frequency and/or magnitude of errors associated with human factors.
An understanding of human factors, including what people see, what they hear, what they interpret, and subsequently how they respond, can help an engineer design systems and processes that reduce the potential for human error. The human factors life cycle approach illustrated in this chapter is an important tool in the journey toward holistic design, construction, operation, maintenance, and decommissioning of reliable systems.
Human factors information is available in a number of journals, including Human Factors, Human Factors and Ergonomics in Manufacturing, and Human–Computer Interaction. Some articles that may be of interest to readers include the following:
In addition to those identified in the reference section, a number of books and articles may be useful in furthering your understanding of human factors, including the following:

14

Human Factors

14.1 Introduction

14.2 What Is “Human Factors”?

14.3 The Human in the System

14.4 Life Cycle Approach to Human Factors

14.4.1 Example Case Study

14.5 Human Factors and Decision Making

14.5.1 Information Receipt

14.5.2 Information Processing

| Method | Results/impact |
|---|---|
| 1) Task redesign | Reduce working memory limitations |
| 2) Interface redesign | Direct selective attention (e.g., use voice for tasks when eyes are required for a different task) |
| 3) Training | Develop automaticity through repeated and consistent practice; improve resource allocation through training in attention management |
| 4) Automation | Limit resource demands through number of tasks; direct selective attention |

14.5.2.1 Stressors

Work Overload

Fatigue

Vigilance

Lighting and Noise

Vibration

Temperature

Air Quality

Psychological Stress

Life Stress

14.6 Application of Human Factors Guidance

14.7 Heuristics and Biases in Decision Making

14.7.1 Satisficing Heuristic

14.7.2 Cue Primacy and Anchoring

14.7.3 Selective Attention

14.7.4 Availability Heuristic

14.7.5 Representativeness Heuristic

14.7.6 Cognitive Tunneling

14.7.7 Confirmation Bias

14.7.8 Framing Bias

14.7.9 Management of Decision-Making Challenges

14.7.9.1 Methods to Improve Decision Making

14.7.9.2 Case Study: USS Vincennes

Overview

Cue Analysis

Heuristics Analysis

Case Study Summary

14.8 Human Factors Contribution to Incidents in the Pipeline Industry

14.9 Human Factors Life Cycle Revisited

14.10 Tools and Methods

14.11 Summary

References

Bibliography